SOC Technology Failures — Do They Matter?

Most failed Security Operations Centers (SOCs) that I’ve seen did not fail because of technology. Lack of executive commitment, process breakdowns, ineffective workforces (often a result of poor management and lack of commitment… again), and talent shortages have killed more SOCs than any and all technology failures.

As we work on the next SOC paper jointly with Deloitte (paper 1, paper 2, paper 3 coming out really soon), we saw a need to review some of the current technology challenges in the SOC. Hence this blog post was born.

BTW, if somebody wakes me up at 3:00 a.m. and asks, “Anton, what is the top reason why a security operations center may fail?”, I would name the loss of executive commitment. I have seen too many SOCs decay over time as management lost interest first in their excellence, then in their performance, and finally in their existence… Some of the notable breaches of the last decade can be traced to a SOC that was developed, refined, and improved, and then left to deteriorate (naïve outsourcing often played the role of the final nail in the coffin). On the flip side, nothing revives a SOC’s standing better than a major security incident; the trick is to keep the momentum going for years afterwards…

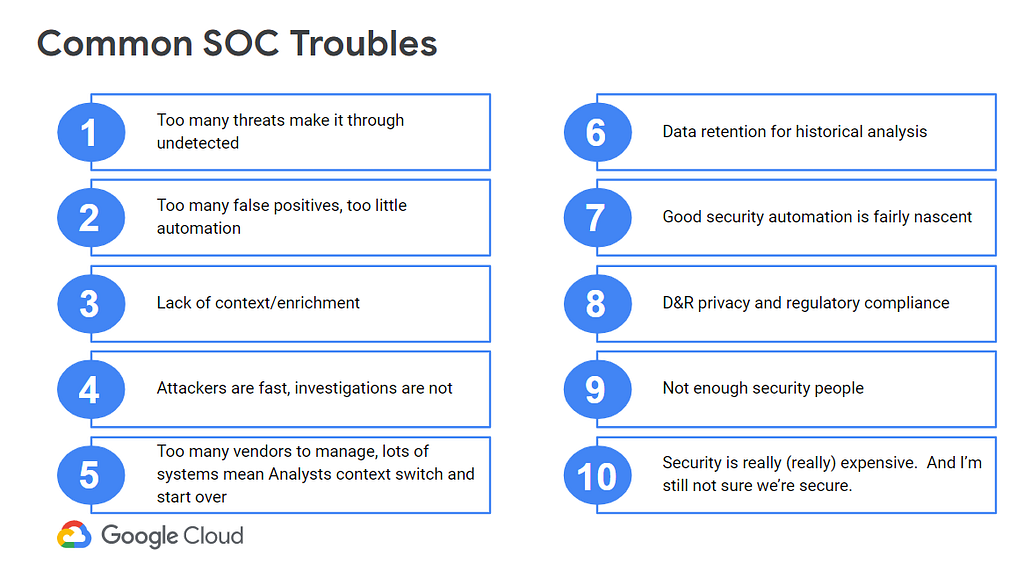

But I digress. Let’s stick mostly to technology-focused failures. An astute reader will notice that in the list below, some of the purported technology failures are really process failures in disguise. I apologize for this in advance 🙂

Scaling failures: often, when you run a proof of concept (POC) of an impressive new tool, its ability to scale is not truly understood and battle-tested until you onboard the tool into your workflow. Scaling has several dimensions: the ability to consume data, process data, store data, and make sense of all your unique data types at the scale you need. Even the way you use the tool may cause unforeseen bottlenecks and affect your ability to scale. Far too often, vendors showcase a product’s abilities in its best and perfect use case, with little regard to scale. In other instances, the tool may have strong engineering behind it and truly be able to scale to many use cases, but have a terrible user experience that makes everyone dread using it with the data volumes at hand (think drop-down menus with 2300 entries…). Or, the tool works, but only as long as you down-scope your collection efforts to below your actual security needs. Finally, the tool may “scale physically, but not economically,” i.e., it will run at the scale you need, but nobody can realistically afford it…

Tool deployed and then not operationalized sounds like a process failure, or a people failure. I lamented this back in 2012, and the affliction has not truly subsided. However, why do some tools sit unused in their boxes while others develop active and passionate user bases? Are you sure it has nothing to do with the tool? Perhaps the tool vendor made some incorrect assumptions about how their technology is actually used in the real world? Or overestimated its value a bit for a particular type of client? Or perhaps the client thought that deploying the tool and turning it on was “self-service,” only to realize that they should have paid a consulting partner to make it work.

In other cases, the tool “runs, but does not work” (my name for a failure to design the tool to be usable in real-world environments). There are also piles of tools that are deployed and used in production, but with only 10% of their capabilities utilized. Some technologies seem valuable, but are such a burden to maintain and use in real life that getting that value is practically impossible. A SOC should not spend time and resources managing such technologies. If your key SOC technology (whether you call it a SIEM or not) is that hard to manage, toss it and buy SaaS-based technology.

All in all, SOCs that have to manage too many tools suffer. A pile of boxes (yes, and cloud services) that need care and feeding, tuning, and refining can overwhelm even a large team. Buy what you will use, and use what brings value!

Shiny new tool syndrome is still rampant in some SOCs. A new CISO comes in and champions the implementation of a new tool, then leaves after a short time (like most CISOs), and then another new CISO comes in and tries it all over again. And the tools multiply, getting less shiny day by day. Or, the SOC team champions a build-first strategy in areas where it is better to buy, only to discover that building scalable solutions with open source tooling is way more challenging than initially thought. Yes, DIY SOC tools fail as well.

Data collection failures still plague many SOCs. Now, again, one can blame this on people and processes (especially those people in IT who just didn’t give us the data). However, in many cases it is in fact the tools (such as when a pre-cloud security monitoring tool is aimed at the cloud). And data collection problems do not stop at getting the data: the lack of a uniform data model and the difficulty of getting value out of raw, unenriched data belong in the same category.

A one-sided visibility stack is definitely a tool challenge as well. A SOC that only uses a SIEM, only uses an EDR, or (if you are crazy) only uses an NDR is missing out. As I noted in the SOC visibility triad discussion (2020 refresh), there is a decent chance that in the near future a SOC that uses a 2015-style triad of SIEM+NDR+EDR will also be missing out, for example on application security telemetry, as organizations develop more security use cases for observability data.

Somewhat related, “old tools that don’t cover new environments” sounds like a tool challenge, and the cloud example above works here as well. Frankly, many traditional SOCs struggle with cloud, containers, and other modern IT technologies and environments (old example). You don’t need to buy the whole lot (CWPP, CSPM, CASB, SSPM, CNAPP, etc.), but you do need to be mindful of public cloud visibility gaps in your SOC.

Along similar lines, tools that promise “a single pane of glass” usually don’t deliver it. Don’t wait for a vendor to invent a “single pane of glass”: it either won’t come, or it will come out of the box “pre-broken.” I am not sure if the mesh is the answer either. Note that integrated tools are hard to adopt if you already have siloed tools that are at least partially successful. Would you buy an XDR that includes an EDR if you are happy with your different EDR?

Automation is sometimes more work than value, even with SOAR. Again, this may be seen as a people challenge (“hey, you just don’t have enough security developers in your SOC… oh wait… you have none”). In real life, automation is of course a benefit, but it does require work to deliver. Indeed, this reads like another people/process challenge, but perhaps tools can eventually deliver real (not excessively optimistic) low-code/no-code security automation?

Thanks to Iman Ghanizada for the ideas and contributions to this post!

Related posts:

- “Kill SOC Toil, Do SOC Eng”

- “Achieving Autonomic Security Operations: Reducing toil”

- “A SOC Tried To Detect Threats in the Cloud … You Won’t Believe What Happened Next”

- “New Paper: “Autonomic Security Operations — 10X Transformation of the Security Operations Center””

- “New Paper: “Future of the SOC: Forces shaping modern security operations””

- “New Paper: “Future of the SOC: SOC People — Skills, Not Tiers””

- “Beware: Clown-grade SOCs Still Abound”

SOC Technology Failures — Do They Matter? was originally published in Anton on Security on Medium.

*** This is a Security Bloggers Network syndicated blog from Stories by Anton Chuvakin on Medium authored by Anton Chuvakin. Read the original post at: https://medium.com/anton-on-security/soc-technology-failures-do-they-matter-8abcffdc2cb5?source=rss-11065c9e943e------2