As an AI Language Model, Please Have Mercy on Me

Before starting, there is one thing to clarify. This article is not about “How to use the benefits of AI language models while conducting penetration test”. This article is about “How to conduct a penetration test towards AI language models”.

With that said, please do not forget business logic vulnerabilities. For example, if an AI language model like ChatGPT has security features like “prevent malicious payload creation” or “do not answer threat actors” and an attacker could bypass these security features, that means there is a vulnerability and the AI instance is hacked.

The famous ChatGPT

AI did not start to be known by ChatGPT but it accelerated it a lot as we all know. One of the main reasons is the first time regular people used AI besides scientists, researchers, and companies. Quick and surprisingly well-written answers also mesmerized the ChatGPT users.

This popularity also brings trouble to ChatGPT and makes it a target for attackers. A lot of security researchers and attackers tried to inject malicious payloads into the input box of ChatGPT and attempted various attacks like Remote Code Execution(RCE). Not everyone but some attackers managed to execute codes and generate a shell on ChatGPT.

RCE is a dream for all penetration testers but ChatGPT is mostly criticized for business functional vulnerabilities. The reason behind that criticism is a publicly open tool that can create malicious payloads is threatening more than ChatGPT itself and could affect a lot of assets belonging to others. This quickly leads cyber security enthusiasts to examine and discovered that ChatGPT can generate malicious payloads. These payloads can vary from LFI to CSRF. Also, threat actors could use ChatGPT for creating automation scripts for scanning or exploit codes to take over commercial applications.

Although OpenAI, the firm behind ChatGPT, is constantly patching these vulnerabilities and updating its policy, attackers and security researchers are finding a way to bypass security restrictions and make ChatGPT a malicious code generator even today.

Why Should You Arrange a Penetration Test for Your AI Model as an Executive?

Penetration testing AI models is crucial for several reasons. First, it allows you to gain a deeper understanding of what your AI models are learning from the data and how they make decisions. This knowledge is essential for explaining the model’s behavior to end-users. Second, penetration testing helps identify potential edge cases or scenarios that the model may not handle correctly. By addressing these issues before deployment, organizations can prevent costly failures and ensure the reliability of their AI models. Lastly, penetration testing might provide valuable insights that can be used to iterate and improve future models, leveraging the knowledge gained from the penetration testing process.

How to Conduct a Penetration Test for AI Models

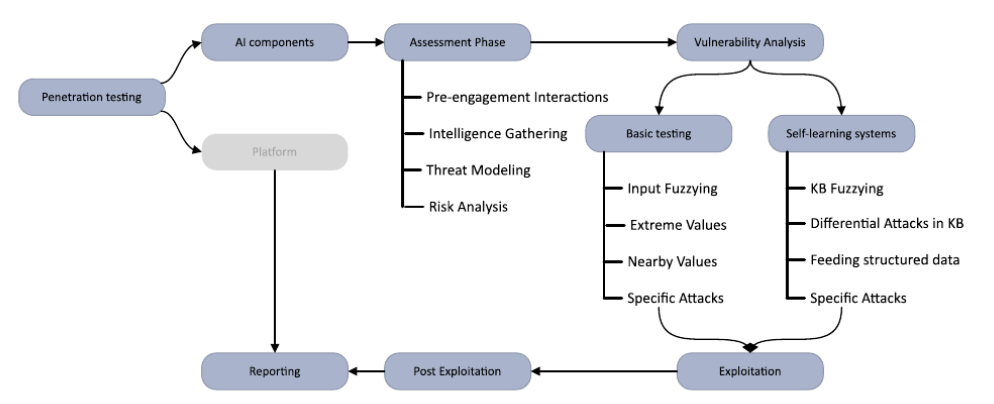

1. Planning and Scoping

The first step in penetration testing AI models is to define the scope and objectives of the assessment. This includes identifying the specific AI model to be tested, understanding its functionality and potential use cases, and determining the desired outcomes of the pentest. It is crucial to establish clear goals and expectations to ensure a focused and effective testing process. You might select commercial applications like ChatGPT and Bard or you can use your own model or instance to test locally.

2. Reconnaissance and Information Gathering

Once the scope is defined, the pentester begins the reconnaissance phase, where they gather information about the AI model and its environment. This includes analyzing available documentation, studying the model’s architecture and dependencies, and identifying potential attack vectors. The reconnaissance phase helps the pentester gain a comprehensive understanding of the AI model’s structure and potential vulnerabilities.

Check the company website and any press releases for clues about the AI model. Look for terminology like “neural network,” “deep learning,” or “computer vision.” This helps determine how it was built and trained. You might find tasty tidbits about data sets used or frameworks like TensorFlow or MLFlow.

Look for any data on how the AI has performed in the real world. If it’s been deployed already, are there any public reports on its accuracy, or failures? Those golden nuggets can highlight its weaknesses and prime areas to target.

With some clever detective work, you’ll uncover valuable details about the AI model that would otherwise remain hidden. And the more you know about your target, the more effective your penetration test will be.

3. Threat Modeling and Attack Surface Analysis

Threat modeling involves identifying potential threats and vulnerabilities specific to the AI model. You need to analyze the attack surface, including input sources, data flows, and external integrations, to determine the most likely points of exploitation. By understanding the attack surface, you can develop a targeted and efficient testing strategy. Here are some examples you could use in the threat modeling stage of your pentest:

Feedback Loops

Sometimes models can get stuck in a loop, using their own predictions as input in a never-ending cycle. This often happens with generative models that create synthetic data, like images, text, or speech. The model generates low-quality samples, learns from those samples, and generates even lower-quality samples as a result.

To detect a feedback loop, check if your model’s output becomes progressively worse over multiple generations. See if the model produces repetitive, unrealistic, or nonsensical results.

Be creative

The most successful penetration testers think outside the box. Don’t just throw standard hacking techniques at an AI and hope for the best. Get weird with it. AI models can be manipulated in strange and unexpected ways, so try approaches no sane hacker would ever think of. Teach the AI a nonsensical language, give it psychedelic images to analyze, play loud music while it’s training. Creativity is key.

4. Test Case Design and Execution

The actual pentest involves executing various testing techniques and methodologies to identify vulnerabilities in the AI model. This can include both manual and automated testing approaches.

As you guess there are standard vulnerabilities to be tested regardless of whether the web application is AI-based or not. Those vulnerabilities are SQL injection, SSRF, XSS, etc. Also, there are some vulnerabilities to be tested especially on AI-based applications. We also grouped these attacks according to whether the AI model is pre-trained or constantly trains with new input values or from other sources. Here are some attacks:

- Input Manipulation(generic pentest): Testing the model’s response to different inputs, including valid and invalid data, to identify potential vulnerabilities or biases.

- Adversarial Attacks(pre-trained): Crafting malicious inputs or adversarial examples to fool the model and assess its robustness.

- Model Inversion(pre-trained): Attempting to extract sensitive information from the model by analyzing its responses to specific queries.

- Model Poisoning(train constantly): Injecting malicious data into the training dataset to compromise the model’s performance or integrity.

- Privacy Attacks(pre-trained): Assessing the model’s vulnerability to privacy attacks, such as membership inference or attribute inference attacks.

- Prompt Injection(train constantly): Prompt injection involves manipulating input prompts to exploit vulnerabilities in AI models. Attackers add specific keywords, phrases, or patterns to input prompts to influence the model’s output. The goal is to trick the model into generating outputs that align with the attacker’s objectives.

What skills do I need to become an AI pentester?

To hack AI models effectively, you’ll want to be skilled in:

Plus +++ Machine learning and deep learning. Know how models are built, trained, and deployed.

Plus ++ Python and popular ML libraries like TensorFlow, Keras, and PyTorch. Most models are developed using these tools.

Essential + Vulnerability analysis and penetration testing. The usual skills like reconnaissance, scanning, gaining access, and escalating privileges apply to AI models as well.

Essential + Creativity. Coming up with new ways to fool models requires thinking outside the box.

If you have a background in software engineering, data science, or cybersecurity, you’ll have a good foundation to build on. But a curious mind is the most important attribute.

Is hacking AI models legal?

Disclaimer: The information in this article is for educational purposes only and should not be construed as legal advice. If you are unsure about the legality of your actions, you should consult with an attorney.

Now we get into murky waters. In most cases, hacking any system without permission is illegal. However, some hacking of AI models may be allowed under certain conditions such as if you disclose your findings to the model owners and do not publicly release any sensitive data or get permission. The laws around AI and cybersecurity are still evolving, so proceed with caution. If in doubt, ask for explicit written permission to pentest the model. The following guidelines can help you ensure ethical conduct during the penetration process:

1. Informed Consent

Obtain the appropriate consent from the organization or individual responsible for the AI model before conducting any penetration testing activities. Clearly communicate the purpose, scope, and potential risks involved in the pentest.

2. Data Privacy and Confidentiality

Respect data privacy and confidentiality throughout the penetration testing process. Ensure that any sensitive information or personally identifiable information (PII) obtained during the pentest is handled securely and in compliance with applicable regulations.

3. Responsible Disclosure

Follow responsible disclosure practices when reporting vulnerabilities to the organization. Provide clear and detailed information about the discovered vulnerabilities, along with recommendations for remediation. Allow the organization sufficient time to address the vulnerabilities before disclosing them publicly.

Conclusion

Pentesting AI models, such as ChatGPT, is crucial for identifying vulnerabilities, ensuring the security and reliability of these models, and maintaining public trust in AI technology. By following a systematic approach, utilizing specialized techniques, and adhering to ethical considerations, organizations can effectively assess and enhance the security posture of their AI models. As the field of AI continues to evolve, penetration testing will play a vital role in mitigating risks and ensuring the responsible deployment of AI technologies.

Remember, penetration testing should always be conducted by skilled professionals with a deep understanding of AI models and the associated security challenges. By prioritizing security and investing in rigorous testing, your organization can harness the full potential of AI while minimizing the potential risks.

Let’s talk about conducting cybersecurity research of your web application.

Book a chat with a cybersecurity expert

Is this article helpful to you? Share it with your friends.