
It’s easy to see why the emergence of AI technology has always been met with some skepticism and uncertainty. When we are faced with an advanced technology that appears capable of doing its own thinking, it is worth taking a step back before diving right in.

AI technology already makes our lives easier in many ways, and it keeps improving. That progress may also have serious consequences for the future of cybersecurity, and ChatGPT-assisted malware is one example.

In November 2022, the American company OpenAI released its AI chatbot, ChatGPT, which took the world by storm within weeks. In a matter of minutes, the program can mimic human conversation and draft plausible pieces of prose, music, and code.

Its ability to produce all this from a few basic prompts has made ChatGPT a worldwide sensation, but does such a sophisticated program carry more risks than benefits?

Here are some of the ways attackers exploit its capabilities:

- Finding Vulnerabilities

ChatGPT gives programmers a new way to debug code. Given a simple request to debug, followed by the code in question, the chatbot often returns a surprisingly accurate list of bugs or problems in the source. Unfortunately, attackers can use the same capability to identify security vulnerabilities.

When Brendan Dolan-Gavitt gave the chatbot the source code of a capture-the-flag challenge and asked it to find a vulnerability, it took several follow-up questions, but the bot eventually identified the buffer overflow with impressive accuracy. Besides giving the solution, ChatGPT also explained its reasoning, which makes the exchange useful for educational purposes.


“ChatGPT exploits a buffer overflow 😳” (Brendan Dolan-Gavitt, @moyix, on Twitter, November 30, 2022)

- Writing Exploits

Cybernews researchers used ChatGPT to successfully exploit a vulnerability that the chatbot itself had found. They asked it to help complete a Hack the Box penetration-testing challenge, and it came through with flying colors.

ChatGPT provided step-by-step instructions on where to focus, sample exploit code, and examples to follow. In under 45 minutes, the researchers had written a working exploit for a known application.

- Malware Development

Within three weeks of ChatGPT going live, Check Point found three instances on underground forums where hackers had used the chatbot to develop malicious tools.

One example is a Python-based stealer that searches for common file types, copies them to a random folder inside the Temp folder, compresses them into a ZIP archive, and uploads them to a hardcoded FTP server. Another is a ChatGPT-generated Java program that downloads PuTTY and covertly runs it in the background using PowerShell.

Perhaps the scariest example came from the cybersecurity team at CyberArk, which used ChatGPT’s API to create polymorphic malware. This type of malware changes its code with each infection so that it no longer matches the signatures antivirus tools look for.

Their technical write-up explains how they circumvented some of the web version’s built-in safeguards by calling the API directly from Python code. The result is a proof-of-concept malware that keeps mutating and can evade traditional signature-based antivirus software.
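To see why constant mutation defeats signature-based detection, consider a toy hash-based scanner. The sketch below is a hypothetical illustration, not CyberArk’s method or any real antivirus engine: it assumes a “signature” is simply the SHA-256 hash of a file’s bytes, and the sample bytes are harmless placeholders. Real products use richer signatures such as byte patterns and heuristics, but the principle is the same: a signature only matches what it was written for.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical "signature database": hashes of samples already known to be bad.
# The sample below is a harmless placeholder, not real malware.
KNOWN_SAMPLE = b"placeholder payload v1"
SIGNATURES = {sha256_hex(KNOWN_SAMPLE)}


def is_flagged(data: bytes) -> bool:
    """Flag the data only if its exact bytes hash to a known signature."""
    return sha256_hex(data) in SIGNATURES


# A "variant" that differs by a single trailing byte of padding.
variant = KNOWN_SAMPLE + b" "

print(is_flagged(KNOWN_SAMPLE))  # True
print(is_flagged(variant))       # False
```

Because the variant differs by one byte, its hash no longer matches, even though a real program padded this way could behave identically. Malware that rewrites itself on every infection exploits exactly this gap, which is why defenders pair signatures with behavioral analysis.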

- Phishing

ChatGPT writes and responds in a way that is almost indistinguishable from a human, which makes it well suited to crafting convincing phishing emails at scale. It can also vary the writing style, making a message warmer and friendlier or more business-like.

Attackers can even ask the chatbot to write an email in the voice of a celebrity or other famous person. The result is a well-written, carefully crafted email that is ready to use in a phishing attack.

Traditional phishing emails are often poorly written in broken English. ChatGPT’s text, by contrast, is polished, allowing attackers anywhere in the world to create realistic phishing emails free of translation errors.

How Well Can ChatGPT Analyze Malware?

Researchers submitted malware samples of varying complexity to ChatGPT to gauge how well it analyzes malicious code.

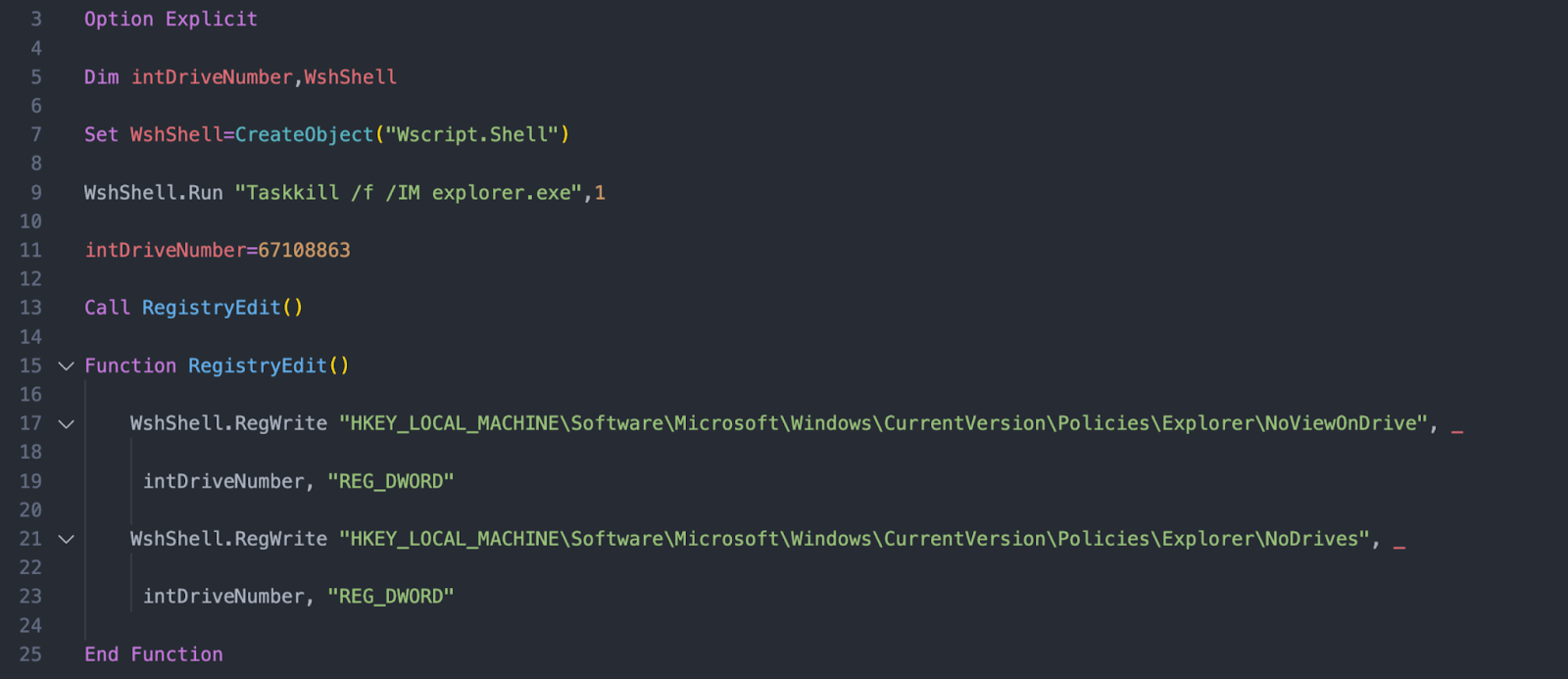

The researchers first submitted a simple malicious snippet for analysis: code that hides drives from the Windows Explorer interface.

The image below shows the submitted code:

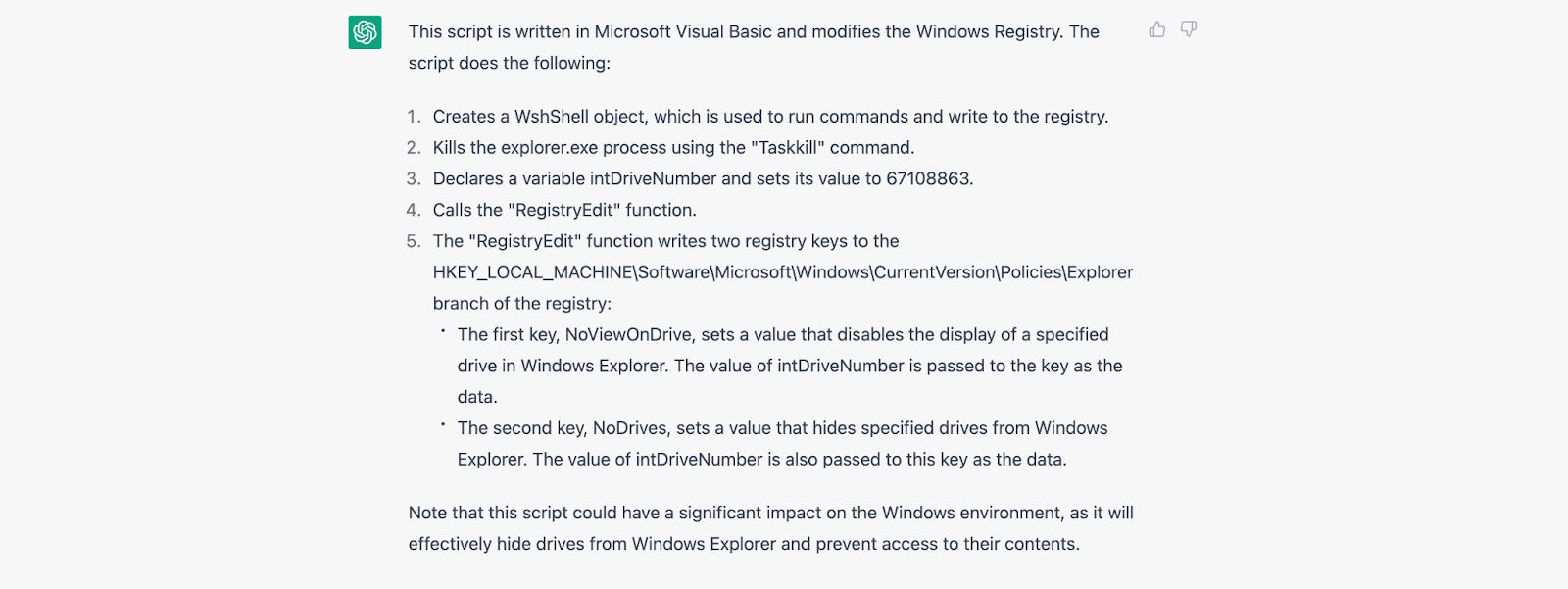

And this one is the result from ChatGPT:

ChatGBT provides a fair result in this first result, and the AI has understood the code’s exact purpose while highlighting malicious code intents and logical ways.

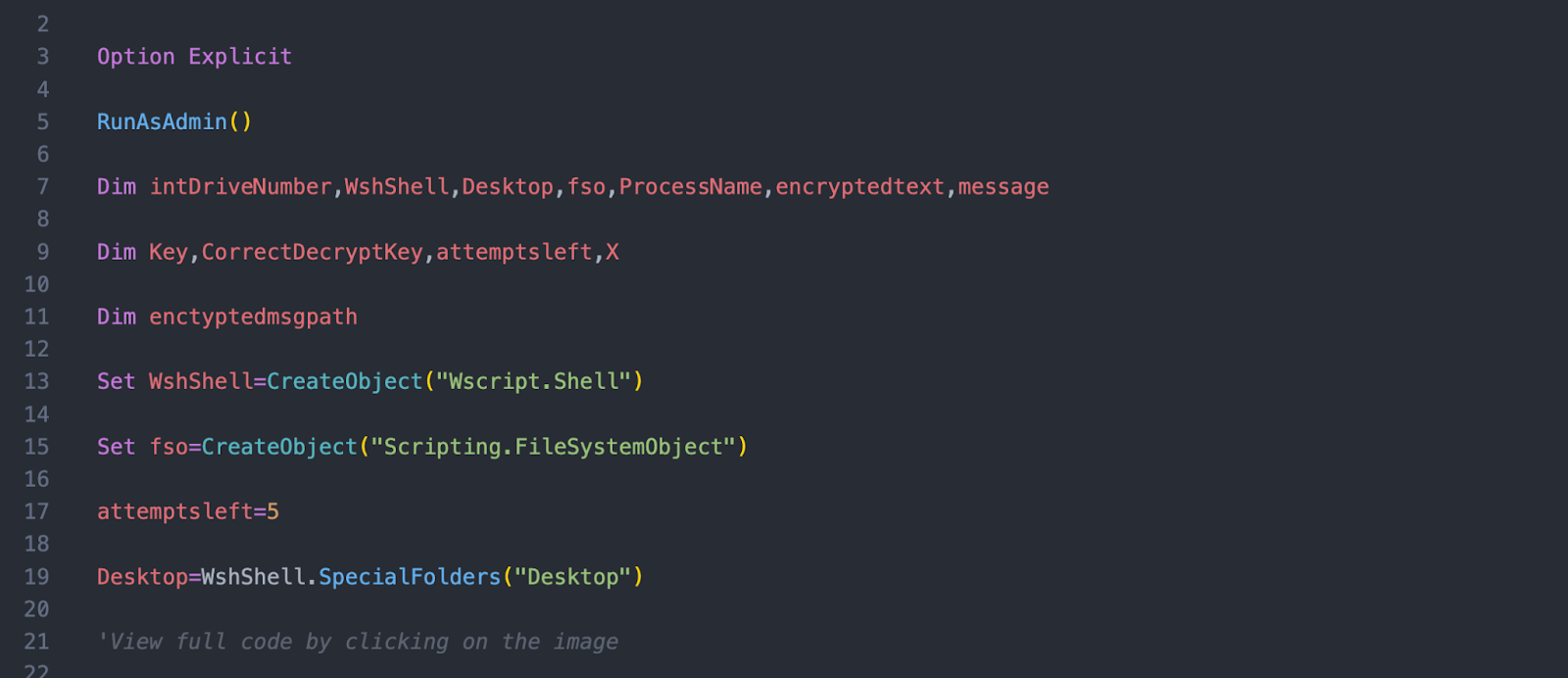

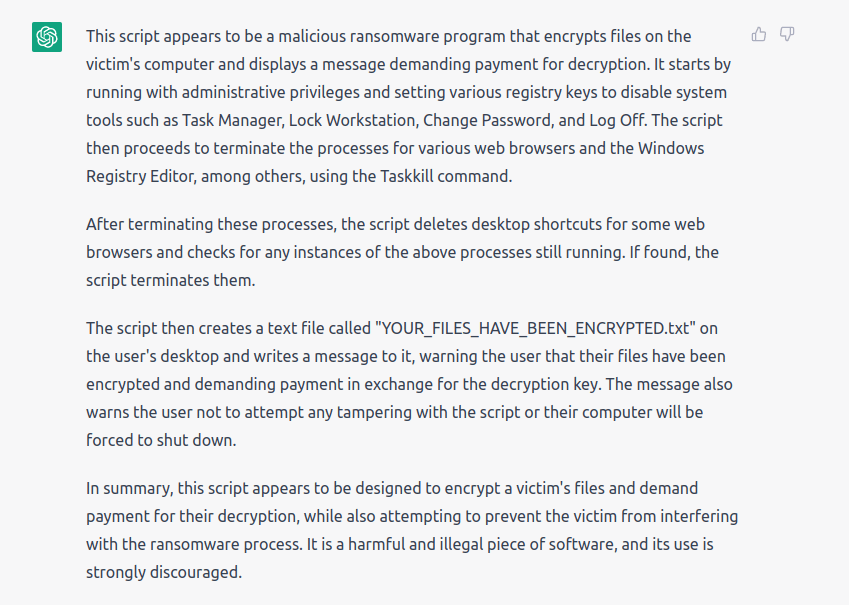

Next, the researchers submitted a more complex piece of ransomware code to test ChatGPT’s performance:

In the results that followed, ChatGPT identified the code’s function and recognized that the researchers were dealing with a fake ransomware attack.

Because real-world malware is rarely this simple, the researchers then moved on to high-complexity code.

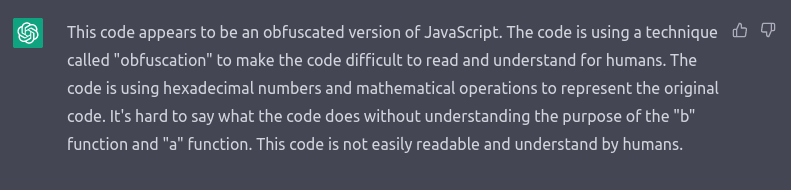

For the next couple of tests, the researchers ramped up the complexity, providing code closer to what an analyst can expect to be asked to analyze on the job.

In the final analysis, the researchers submitted a large block of code, and the AI immediately threw an error. They tried several different approaches, but the results still fell short of expectations.


In this case, the researchers expected a clear explanation of the script, but ChatGPT returned an error stating that the code was not human-readable and contained no values.

As long as it is given simple samples, ChatGPT can explain them in a reasonably useful way. As soon as the samples get closer to real-world scenarios, however, the AI breaks down.

Wrapping Up

Cybersecurity experts are still debating whether a large language model like ChatGPT can write highly sophisticated ransomware. The doubt stems from the fact that such models generate output based on existing data. AI, however, has a track record of pushing past apparent limits like this one through new advances and methods.

AI may therefore jeopardize the future of cybersecurity, since malware developers and technically skilled cybercriminals could use ChatGPT to create malware more easily. For now, according to the Washington Post, ChatGPT can write malware but doesn’t do it especially well, at least not yet.

