Label Engineering for Supervised Bot Detection Models

At DataDome, we leverage trillions of signals daily (signatures, behaviors, anomalies, etc.) with different types of ML models and domain expert rules to block malicious traffic for our customers.

Signature-based detection is a core part of our solution, and we leverage thousands of domain expert rules, updated daily, to identify bots and block them straight away. Gathering and maintaining our huge set of rules is a formidable opportunity for a data scientist, as it provides a way to define the coveted “label” for a dataset.

In bot detection use cases, the label expresses whether the request is malicious or not. DataDome’s internal tools and signature-based rules allow our solution to generate labels for billions of requests daily—which opens up opportunities to apply machine learning (ML) in a supervised way.

Creating quality labels for billions of requests is a complex process that involves various engineering tasks. In the data science community, this process is often referred to as label engineering. Let’s walk through some interesting label engineering problems we have encountered and effective ways to solve them.

Domain Expert Labels & Quality Considerations

Signature-Based Rules From Domain Experts

Our very first labels come from domain experts who establish signature-based rules to identify malicious bots. To do so, they leverage data coming from requests’ signatures.

A simple request signature is a set of attribute values describing a request, such as the browser and its version.

To identify malicious bots, domain experts then write statements that match a specific set of attribute values, for example:

Browser=Chrome AND BrowserVersion < 100

Every request matching the above pattern can be labeled as a bot request using the expressed rule. Combining thousands of rules allows us to identify various types of bot signatures very accurately.
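To make programmatic labeling concrete, here is a minimal Python sketch. It assumes that a request signature can be represented as a flat dictionary of attributes and that each rule is a boolean predicate over it. The names `Rule`, `outdated_chrome`, and `programmatic_label` are hypothetical and do not reflect DataDome's internal rule engine.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Union

# Hypothetical representation: a request signature is a flat dict of attributes.
Signature = Dict[str, Union[str, int]]


@dataclass
class Rule:
    """A domain expert rule: a readable name plus a predicate over a signature."""
    name: str
    predicate: Callable[[Signature], bool]


def outdated_chrome(sig: Signature) -> bool:
    """Browser=Chrome AND BrowserVersion < 100 (the example rule above)."""
    version = sig.get("BrowserVersion")
    return sig.get("Browser") == "Chrome" and isinstance(version, int) and version < 100


def programmatic_label(sig: Signature, rules: List[Rule]) -> int:
    """Return 1 (malicious bot) if any rule matches, 0 if no rule fires."""
    return int(any(rule.predicate(sig) for rule in rules))


RULES = [Rule("outdated_chrome", outdated_chrome)]

requests = [
    {"Browser": "Chrome", "BrowserVersion": 85},
    {"Browser": "Chrome", "BrowserVersion": 120},
    {"Browser": "Firefox", "BrowserVersion": 90},
]
labels = [programmatic_label(r, RULES) for r in requests]
print(labels)  # [1, 0, 0]
```

Note that a 0 here only means "no rule fired", not "confirmed legitimate"; that distinction matters for the label engineering steps that follow.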

The process is called “programmatic labeling” (as opposed to manual labeling) and it allows us to scale labeling while decreasing the cost.

Improving Domain Expert Labels

Labels from domain experts’ signature-based rules are very useful as a first approach. But since we want to do supervised ML, we must assess label quality in light of how labels are used to train an ML model. For ML training, label consistency and correctness are fundamental.

- Consistency: two identical data samples must have the same label.

- Correctness: the label must reflect the ground truth.

In many cases, the ground truth is not available but can be approximated using a consensus of domain experts.

Example:

To illustrate consistency and correctness, we can use the bot detection label and consider a data sample as a set of attributes coming from HTTP request headers.

- Consistency issue: samples 1 and 2 are identical (given the available attributes) but have different labels, so the label is inconsistent and we should act. For example, we may decide that we have too few attributes to tell them apart and collect more attributes so that the samples become distinct, which resolves the label conflict. Alternatively, we can discard both samples to remove the inconsistency.

- Correctness issue: sample 3 looks fine, but a domain expert could establish that version 10 of the Mobile Safari browser is very old and should be considered an anomaly. Since the domain expert is a good proxy for the ground truth, sample 3’s label is likely incorrect, and we can act to correct it.
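The consistency check can be automated by grouping samples with identical attribute values and flagging groups that carry more than one label. Below is a minimal Python sketch using made-up samples in the spirit of samples 1 to 3 (the attribute names and values are hypothetical); it implements the "discard both samples" option. Correctness issues such as sample 3 cannot be found this way, since spotting them requires domain knowledge rather than a comparison between samples.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical samples: header-derived attributes and a label
# (1 = malicious bot, 0 = legitimate). Values are illustrative only.
Sample = Tuple[Dict[str, object], int]

samples: List[Sample] = [
    ({"browser": "Chrome", "version": 99, "os": "Windows"}, 1),
    ({"browser": "Chrome", "version": 99, "os": "Windows"}, 0),
    ({"browser": "Mobile Safari", "version": 10, "os": "iOS"}, 0),
]


def sample_key(attrs: Dict[str, object]) -> Tuple:
    """Order-independent, hashable key for a sample's attribute values."""
    return tuple(sorted(attrs.items()))


def find_inconsistent(samples: List[Sample]) -> Dict[Tuple, Set[int]]:
    """Return the attribute combinations that appear with more than one label."""
    labels_by_key: Dict[Tuple, Set[int]] = defaultdict(set)
    for attrs, label in samples:
        labels_by_key[sample_key(attrs)].add(label)
    return {k: v for k, v in labels_by_key.items() if len(v) > 1}


def drop_inconsistent(samples: List[Sample]) -> List[Sample]:
    """Discard every sample whose attribute combination has conflicting labels."""
    conflicts = find_inconsistent(samples)
    return [(a, y) for a, y in samples if sample_key(a) not in conflicts]


print(len(find_inconsistent(samples)))  # 1
print(len(drop_inconsistent(samples)))  # 1
```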

Inconsistency may disturb the learning phase and hurt model performance. In signature-based bot detection, the model would be unable to discriminate between legitimate and malicious bots, increasing both false positives and false negatives.

Incorrectness may lead the model to learn unwanted patterns and, consequently, to exhibit unwanted behaviors. In signature-based bot detection, the model would learn to treat a malicious bot as a legitimate user, increasing false negatives.

How to Improve Label Consistency & Correctness

Improving Consistency

Labels come from the aggregation of domain expert signature-based rules. Unfortunately, some rules can overlap and contradict each other, resulting in inconsistencies.

In this case, consistency can be improved by discarding the conflicting samples, or by using a more advanced approach such as weak supervision algorithms.
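Discarding conflicting samples can be sketched at the rule level: each rule either votes on a sample or abstains, and a sample is kept only when all non-abstaining votes agree. This is a minimal Python sketch; the vote encoding (1 = malicious, 0 = legitimate, `None` = abstain) and the existence of rules that vote "legitimate" (for example, hypothetical exception rules) are assumptions for illustration.

```python
from typing import List, Optional, Sequence, Tuple

# Each rule votes 1 (malicious), 0 (legitimate) or None (abstains).
Vote = Optional[int]


def keep_consistent(vote_matrix: Sequence[Sequence[Vote]]) -> List[Tuple[int, int]]:
    """Return (sample_index, label) for samples whose non-abstaining votes agree.

    Samples where rules contradict each other are discarded. Samples where no
    rule fires are also skipped here; they are handled by later labeling steps.
    """
    kept = []
    for i, votes in enumerate(vote_matrix):
        cast = {v for v in votes if v is not None}
        if len(cast) == 1:  # at least one vote and no contradiction
            kept.append((i, cast.pop()))
    return kept


votes = [
    [1, None, 1],        # two rules agree: malicious
    [1, 0, None],        # rules contradict each other: discarded
    [None, None, None],  # no rule fired: left for later steps
    [None, 0, None],     # a hypothetical exception rule: legitimate
]
print(keep_consistent(votes))  # [(0, 1), (3, 0)]
```

Discarding is the simplest option but throws data away; the weak supervision approach discussed later instead resolves such conflicts with probabilistic labels.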

Improving Correctness

Existing rules may not cover the full diversity of bot signatures, because hackers are constantly innovating. Creating and updating our rules daily is therefore essential in the long run.

Due to the inherent nature of bot detection, label correctness always suffers from multiple sources of error. Some bots cannot be detected with a signature-only approach because attackers forge every attribute to make their requests look legitimate. As a result, many requests remain mislabeled even after signature-based rules are applied, and correcting them is a primary focus of our label engineering.

Creating Labels for Training a Bot Detection ML Model

We want to design a label engineering process that improves both the consistency and the correctness of the labels coming from domain expert rules.

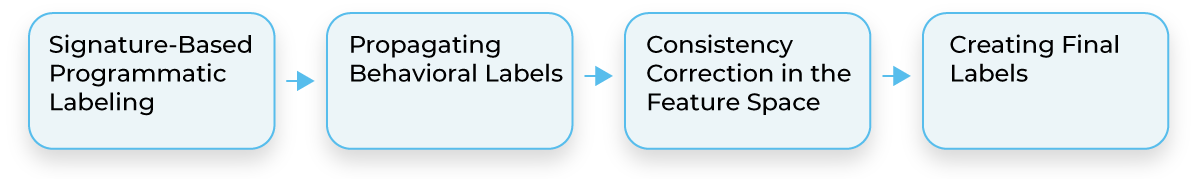

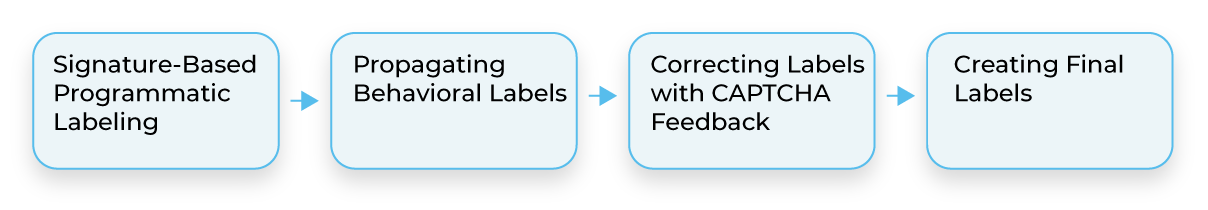

A label engineering process for bot detection.

Step 1: Create the first label from domain expert rules.

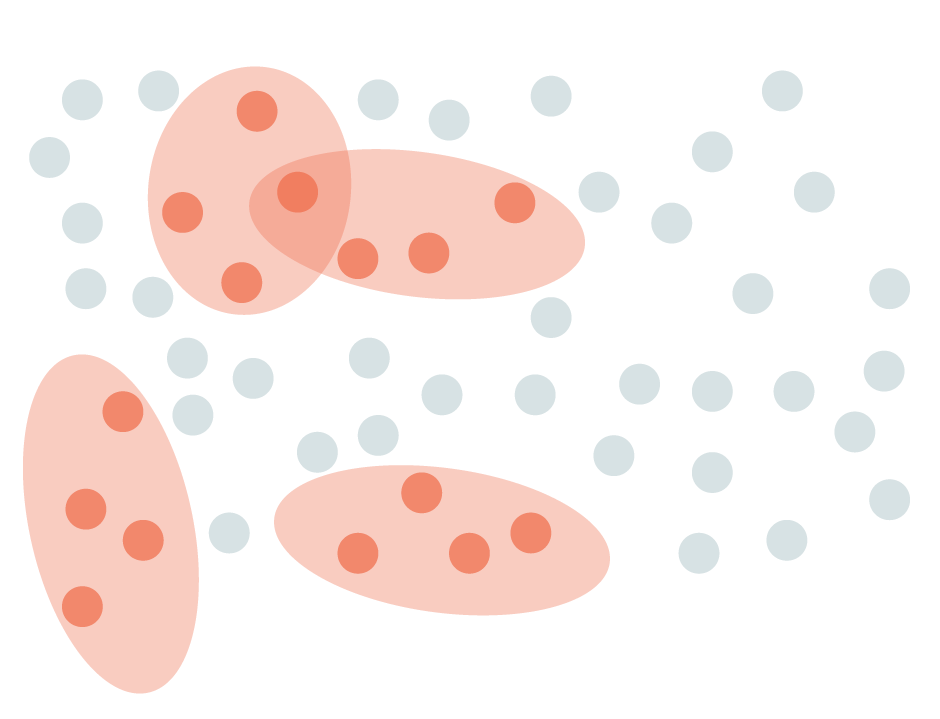

We start by gathering rules from domain experts to create a first (programmatic) label, which results in identifying many samples as malicious bots.

Orange circles represent malicious bots identified by domain expert rules.

Step 2: Improve correctness using behavioral detection.

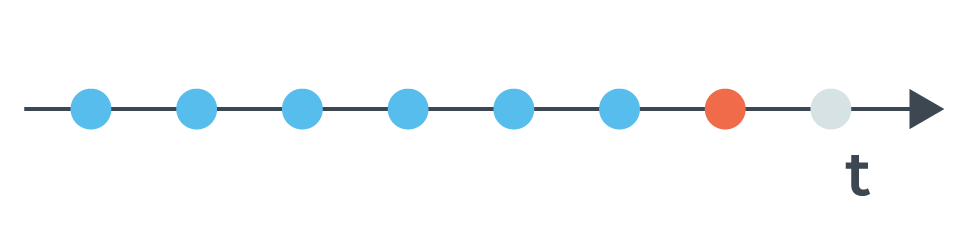

At DataDome, we use not only signature-based detection but also behavioral detection, which means we can identify a malicious bot based on its behavior over time. As a result, a bot may perform several requests before it is labeled as a bot.

A bot performs several requests in a short period before being identified as malicious.

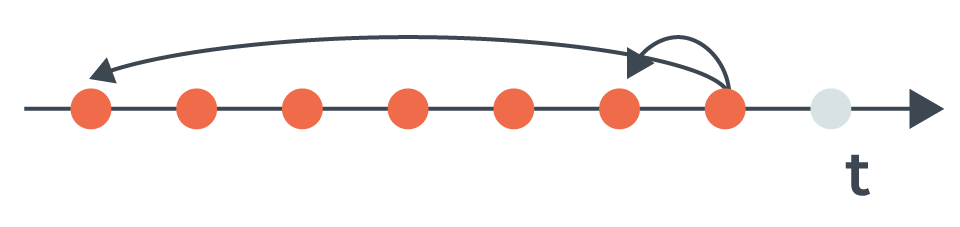

Once the bot is identified, we propagate the new label back in time to every previous hit from the same bot, which improves label correctness.

Propagation of the malicious label to previous hits.

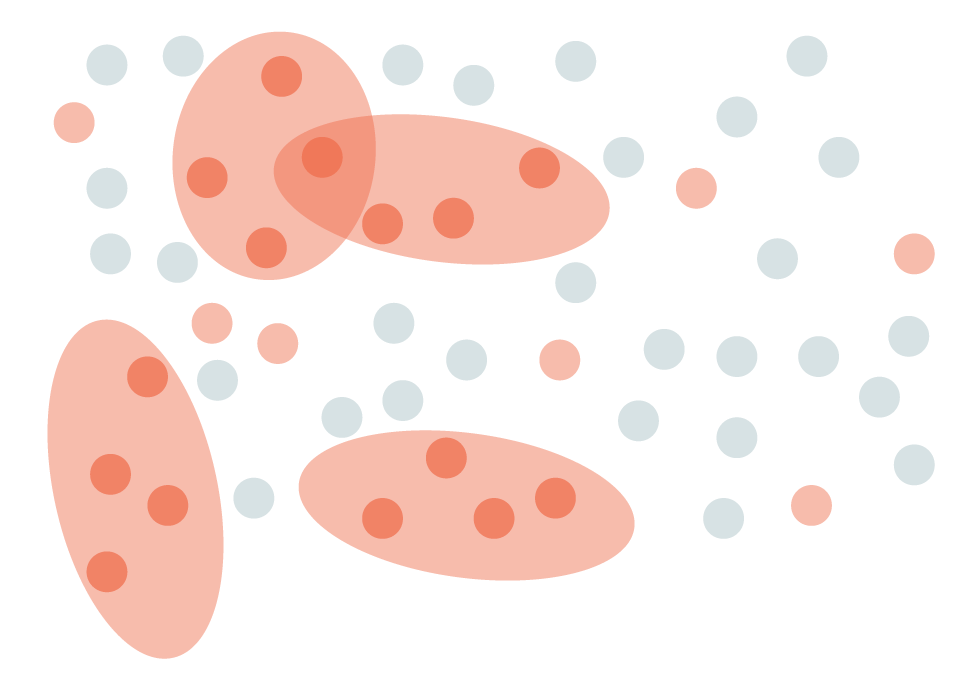

The propagation results in more requests labeled as malicious bots in the entire dataset.

Malicious bots identified by signature-based rules and propagation of behavioral detection.
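The back-propagation in Step 2 can be sketched as follows. This minimal Python version assumes that hits from the same bot share a hypothetical `client_id` and that behavioral detection reports the time at which each client was flagged; every earlier hit from that client is then relabeled as malicious.

```python
from dataclasses import dataclass, replace
from typing import Dict, List


@dataclass(frozen=True)
class Hit:
    client_id: str    # hypothetical key linking hits from the same bot
    timestamp: float  # seconds since epoch
    label: int        # 1 = malicious, 0 = not labeled malicious


def propagate_back(hits: List[Hit], detected_at: Dict[str, float]) -> List[Hit]:
    """Label as malicious every hit sent by a client at or before the time
    behavioral detection flagged that client."""
    out = []
    for hit in hits:
        t = detected_at.get(hit.client_id)
        if t is not None and hit.timestamp <= t:
            out.append(replace(hit, label=1))
        else:
            out.append(hit)
    return out


hits = [Hit("a", 1.0, 0), Hit("a", 2.0, 0), Hit("a", 3.0, 1), Hit("b", 1.5, 0)]
print([h.label for h in propagate_back(hits, {"a": 3.0})])  # [1, 1, 1, 0]
```

A real pipeline would also have to decide how far back to propagate and how reliably hits can be attributed to the same bot; both questions are left out of this sketch.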

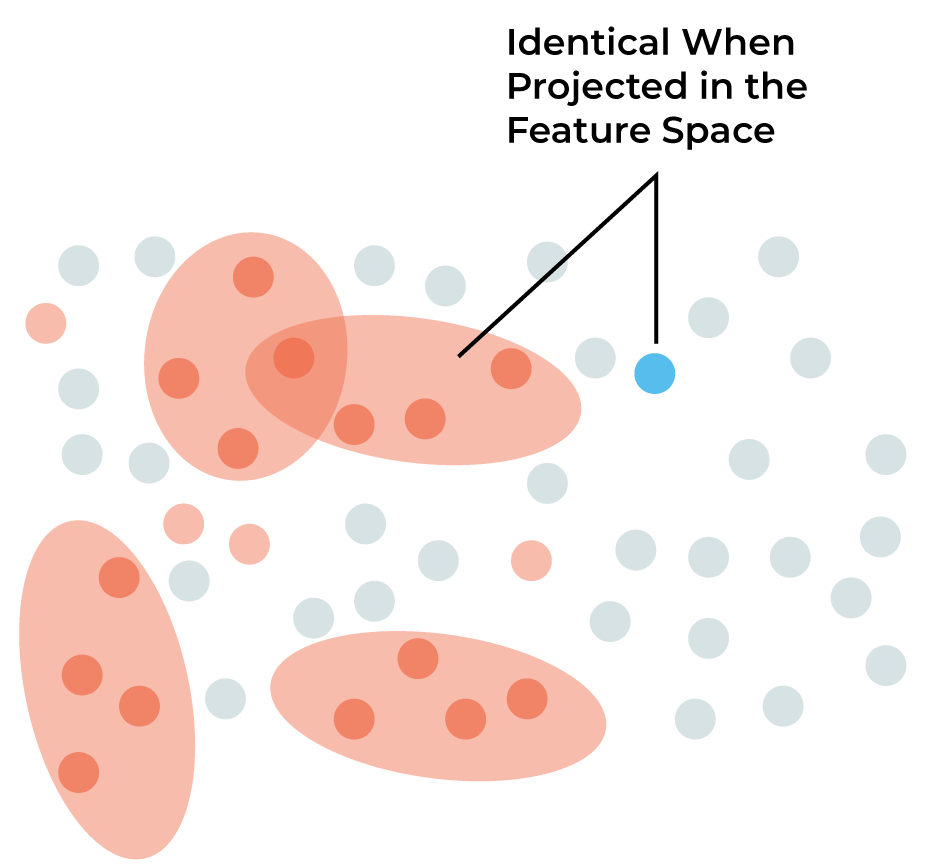

Step 3: Improve consistency by projecting requests in the feature space.

When we train a machine learning model, we often perform data and feature engineering to reduce the number of dimensions. The raw data is thus projected into an N-dimensional space (the feature space).

This projection can map distinct raw requests (1 data point = 1 raw request) to identical data points in the feature space, some of which carry inconsistent labels.

To tackle this issue, we merge the colliding points and keep the positive (malicious) label. The intuition behind this choice is that the original signature-based rule may have been too restrictive to capture all the incriminated points in the original space.

This step ensures consistency in the feature space, but slightly decreases correctness because of the assumption it relies on.

Resulting labeled data points after label consistency correction.
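The merge-and-keep-positive rule of Step 3 can be expressed in a few lines. In this minimal Python sketch, `project` is a hypothetical stand-in for real feature engineering (browser family plus a coarse version bucket); requests that land on the same point are merged, and the point is labeled malicious if any of them was.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List


def project(request: dict) -> tuple:
    """Hypothetical feature engineering: browser family plus a coarse version bucket."""
    bucket = "old" if request["version"] < 100 else "recent"
    return (request["browser"], bucket)


def merge_keep_positive(
    requests: List[dict],
    labels: List[int],
    featurize: Callable[[dict], Hashable],
) -> Dict[Hashable, int]:
    """Collapse requests that share a feature vector; the merged point is
    malicious (1) if at least one of its requests was labeled malicious."""
    merged: Dict[Hashable, int] = defaultdict(int)
    for request, label in zip(requests, labels):
        point = featurize(request)
        merged[point] = max(merged[point], label)  # positive label wins conflicts
    return dict(merged)


requests = [
    {"browser": "Chrome", "version": 85},
    {"browser": "Chrome", "version": 90},
    {"browser": "Chrome", "version": 120},
]
print(merge_keep_positive(requests, [1, 0, 0], project))
# {('Chrome', 'old'): 1, ('Chrome', 'recent'): 0}
```

Taking the maximum label is what encodes the "keep the positive label" assumption; it is also why this step can slightly reduce correctness.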

Step 4: Create the final labeled dataset.

Finally, we assume that every request not labeled as a malicious bot comes from a legitimate user. This assumption yields our final dataset, which can be used to train an ML model.

Resulting dataset after label engineering (malicious requests in orange, legitimate requests in blue).
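Step 4 amounts to filling every missing label with "legitimate". A minimal Python sketch, with hypothetical point identifiers, makes the assumption explicit: absence of evidence of being a bot is treated as evidence of legitimacy, which is the assumption the weak supervision section revisits.

```python
from typing import Dict, Iterable, Set


def finalize_labels(points: Iterable[str], malicious: Set[str]) -> Dict[str, int]:
    """Step 4 assumption: any point not labeled malicious is legitimate (0)."""
    return {p: int(p in malicious) for p in points}


dataset = finalize_labels(["p1", "p2", "p3"], malicious={"p2"})
print(dataset)  # {'p1': 0, 'p2': 1, 'p3': 0}
```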

Improving Label Correctness With CAPTCHA Feedback

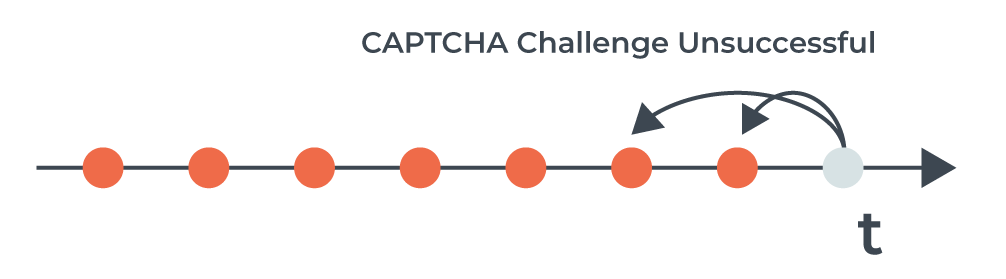

Even if we are confident in the rules used to label requests, we want to close the gap with the ground truth. Fortunately, every time we suspect a user of sending malicious requests, we can challenge them with a CAPTCHA.

A user makes several requests before being considered as malicious and presented a CAPTCHA challenge.

The CAPTCHA result is therefore a signal that helps identify a human being, which lets us add a new processing step to our label engineering process.

Every time a user is challenged with a CAPTCHA and passes it, we can propagate a negative (legitimate) label to all previous requests from the same user.

Previously suspicious requests are labeled as legitimate after a successful CAPTCHA.

Conversely, if the user fails the CAPTCHA, we can propagate a positive (malicious) label to all previous requests from the same user.

Previously suspicious requests are labeled as malicious after an unsuccessful CAPTCHA.

Taking CAPTCHA feedback into account improves label correctness and will ultimately reduce our false positive rate.

Taking into account CAPTCHA feedback in bot detection label engineering.
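The CAPTCHA feedback step can be sketched as a second propagation pass, this time in both directions. This minimal Python version assumes a hypothetical `user_id` linking requests from the same user and a per-user list of challenge outcomes sorted by time. How to handle users challenged several times is also an assumption here: each request takes the outcome of the first challenge at or after it.

```python
import bisect
from dataclasses import dataclass, replace
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Hit:
    user_id: str      # hypothetical key linking requests from the same user
    timestamp: float
    label: int        # current label: 1 = malicious, 0 = legitimate


def apply_captcha_feedback(
    hits: List[Hit], challenges: Dict[str, List[Tuple[float, bool]]]
) -> List[Hit]:
    """challenges maps user_id to (timestamp, passed) pairs sorted by time.

    Each hit takes the outcome of the first challenge at or after it:
    passed -> 0 (legitimate), failed -> 1 (malicious). Hits after a user's
    last challenge keep their current label.
    """
    times = {user: [t for t, _ in events] for user, events in challenges.items()}
    out = []
    for hit in hits:
        user_times = times.get(hit.user_id, [])
        i = bisect.bisect_left(user_times, hit.timestamp)
        if i < len(user_times):
            passed = challenges[hit.user_id][i][1]
            out.append(replace(hit, label=0 if passed else 1))
        else:
            out.append(hit)
    return out


hits = [
    Hit("u1", 1.0, 1), Hit("u1", 2.0, 1),  # flagged, then u1 passes at t=3
    Hit("u2", 1.0, 0), Hit("u2", 4.0, 0),  # u2 fails its challenge at t=5
    Hit("u1", 9.0, 0),                     # after u1's last challenge
]
challenges = {"u1": [(3.0, True)], "u2": [(5.0, False)]}
print([h.label for h in apply_captcha_feedback(hits, challenges)])  # [0, 0, 1, 1, 0]
```

A passed CAPTCHA flipping earlier positive labels to 0 is the mechanism by which this step lowers the false positive rate.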

Going Further With Weak Supervision

We have detailed how we engineer high-quality labels for our ML workloads. In Step 4 of our label engineering process, however, we made the bold assumption that everything not previously labeled is legitimate.

Yet some malicious requests are likely still mislabeled. We want our ML approach to detect previously undetected malicious requests, which requires improving label correctness even further.

Instead of treating unlabeled requests as legitimate, we could create rules that identify legitimate requests, similar to those we created for malicious requests.

Adding rules to label legitimate requests (blue).

As illustrated, this would lead to overlapping rules and inconsistent labels for some data points, and would still leave many requests unlabeled. Both issues can hinder the development of effective ML models, which is where weak labeling (WL) comes into play.

WL is the process of combining noisy labels coming from various systems (like rule-based systems) in order to obtain probabilistic labels. WL allows us to improve consistency by reducing noise in labels generated via rules.

Probabilistic labels allow us to make decisions on inconsistent cases.

WL also allows us to generate weak (probabilistic) labels for previously unlabeled data points, which ultimately increases our labeled dataset size while preserving high-quality labels.

New labels can be set with confidence on previously unlabeled data points.

Once done, we can use the generated labeled dataset to train a machine learning model in a process known as weak supervision.
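Label models used for weak supervision (for example Snorkel's `LabelModel`) estimate how accurate each rule is from the way rules agree and disagree, without access to ground truth. The Python sketch below is a deliberately simplified stand-in, not the method DataDome uses: it takes hypothetical per-rule accuracies as given and combines rule votes with a naive Bayes-style weighted vote, assuming rules are conditionally independent given the true label.

```python
import math
from typing import Optional, Sequence


def probabilistic_label(
    votes: Sequence[Optional[int]],
    accuracies: Sequence[float],
    prior: float = 0.5,
) -> float:
    """Combine noisy rule votes into P(malicious).

    votes[j] is 1 (malicious), 0 (legitimate) or None (rule j abstains).
    accuracies[j] is the assumed probability that rule j is right when it votes.
    Rules are assumed conditionally independent given the true label, so each
    vote adds its log-likelihood ratio to the prior log-odds.
    """
    log_odds = math.log(prior / (1 - prior))
    for vote, acc in zip(votes, accuracies):
        if vote is None:
            continue  # abstentions carry no evidence
        weight = math.log(acc / (1 - acc))
        log_odds += weight if vote == 1 else -weight
    return 1 / (1 + math.exp(-log_odds))


accuracies = [0.9, 0.7, 0.8]  # hypothetical per-rule accuracies
print(round(probabilistic_label([1, 0, None], accuracies), 3))  # 0.794 (rules disagree)
print(round(probabilistic_label([1, None, 1], accuracies), 3))  # 0.973
```

In this simplified version, a point on which no rule votes simply gets the prior back, so new labels only appear where at least one malicious or legitimate rule fires. Keeping only points whose probability is far from 0.5 (the cut-off is a design choice) is one way to retain confident labels for training.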

Leveraging Created Labels

At DataDome, we use label engineering processes to train supervised ML algorithms for bot detection. Paying attention to consistency and correctness in a data-centric approach allows us to improve our models. For example, we improved the AUC of one ML model classifying requests as malicious or not by 3% through consistency improvements alone.

Ultimately, generating high-quality training labels opens the door to performance improvements across all our ML applications.

Conclusion

Label engineering is an essential part of an ML pipeline. It helps leverage programmatic labels at scale while improving label consistency and correctness. We showed that label engineering for bot detection requires domain expertise, but that the overall benefits far exceed the effort required.

*** This is a Security Bloggers Network syndicated blog from Blog – DataDome authored by Florent Pajot, Machine Learning Engineer. Read the original post at: https://datadome.co/engineering/label-engineering-supervised-bot-detection-models/