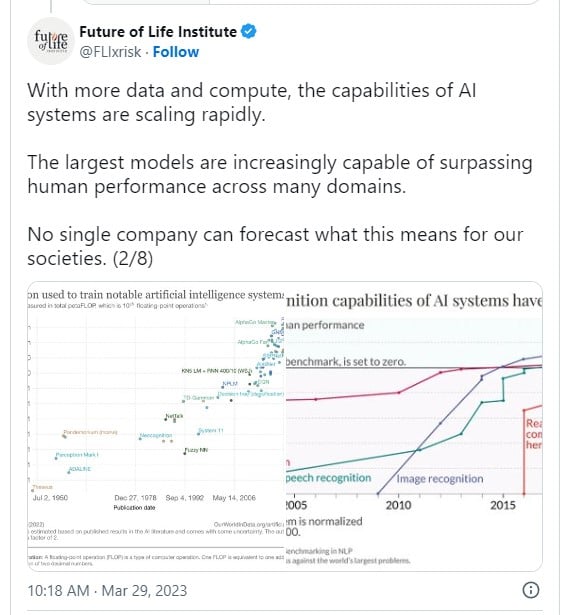

Over a thousand people, including professors and AI developers, have co-signed an open letter to all artificial intelligence labs, calling on them to pause the development and training of AI systems more powerful than GPT-4 for at least six months.

The letter is signed by figures in the field of AI development and technology, including Elon Musk, co-founder of OpenAI; Yoshua Bengio, a prominent AI professor and founder of Mila; Steve Wozniak, co-founder of Apple; Emad Mostaque, CEO of Stability AI; Stuart Russell, a pioneer in AI research; and Gary Marcus, founder of Geometric Intelligence.

The open letter, published by the Future of Life Institute, cites potential risks to society and humanity that arise from the rapid development of advanced AI systems without shared safety protocols.

The concern is that the potential risks of this rapid development have yet to be fully appreciated and accounted for by a comprehensive management system, so the technology's positive effects are not guaranteed.

"Advanced AI could represent a profound change in the history of life on Earth and should be planned for and managed with commensurate care and resources," reads the letter.

"Unfortunately, this level of planning and management is not happening, even though recent months have seen AI labs locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control."

The letter also warns that modern AI systems are now directly competing with humans at general tasks, which raises several existential and ethical questions that humanity still needs to consider, debate, and decide upon.

Some of the questions it highlights concern the flow of information generated by AIs, uncontrolled job automation, the development of systems that outsmart humans and threaten to make them obsolete, and the very control of civilization.

The co-signing experts believe we have reached a point where more advanced AI systems should be trained only under strict oversight, and only after building confidence that the risks arising from their deployment are manageable.

"Therefore, we call on all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4," advises the open letter.

"This pause should be public and verifiable, and include all key actors. If such a pause cannot be enacted quickly, governments should step in and institute a moratorium."

During this pause, AI development teams will have the chance to come together and agree on establishing safety protocols which will then be used for adherence audits performed by external, independent experts.

Furthermore, policymakers should implement protective measures, such as a watermarking system that effectively differentiates between authentic and fabricated content, rules that assign liability for harm caused by AI-generated materials, and publicly funded research into the risks of AI.

The letter does not advocate halting AI development altogether; instead, it underscores the hazards associated with the prevailing competition among AI designers vying to secure a share of the rapidly expanding market.

"Humanity can enjoy a flourishing future with AI. Having succeeded in creating powerful AI systems, we can now enjoy an 'AI summer' in which we reap the rewards, engineer these systems for the clear benefit of all, and give society a chance to adapt," concludes the text.

Comments

lonegull - 1 year ago

Fear perpetuated by science fiction and movies. AI is specialized to specific specialties, chat, programming, facial recognition, problem solving, searching...etc, even GPT is limited in its capabilities. If the companies, universities and groups training AI cannot effect a pause in training, how do they expect Government to effect a pause? Get together (chat, email, video conference...etc) and plan a pause, plan the safeguards and protocols for AI training and development. As with the invention of cars, computers, Internet, telephones, cell phones, televisions, cameras...etc nobody could predict or know how to mitigate the impact and problems these inventions would create over the next 30 - 100 years. This letter is just public fearmongering.

rodney1956 - 1 year ago

According to the following article on the science website ResearchGate, the future (and the past and present) would never even exist without the activity of binary digits (BITS) and the Artificial Intelligence that results from their activity. The article - "If Our Mathematical Universe Is Topological, Complex Coordinates With Real Plus Imaginary Parts Will Enable Time Travel And Magnify Learning Exponentially" - has its link supplied at the end of this comment, and the abstract (intro) is -

Cosmologist, physicist, and mathematician Max Tegmark of MIT (Massachusetts Institute of Technology in the USA) - together with Information Physics - hypothesizes that mathematical formulas create reality. According to Wikipedia, Prof Tegmark's MUH (mathematical universe hypothesis) is: Our external physical reality is a mathematical structure. That is, the physical universe is not merely described by mathematics, but is mathematics. Mathematical existence equals physical existence, and all structures that exist mathematically exist physically as well. His categorization of the universe has four levels, with level 4 being altogether different – from physical constants and quantum branches - equations or mathematical structures. These “altogether different mathematical structures” are, in this article you’re reading, proposed to be two-dimensional (2D) Mobius strips that are formed by the binary digits of 1 and 0 aka base 2 maths, and are joined as pairs into figure-8 Klein bottles which are mathematically immersed in the 3rd dimension. Then the photons and gravitons (electromagnetic and gravitational waves and fields) created by – respectively – trillions of strips and trillions of bottles interact via Vector-Tensor-Scalar Geometry to produce a united space-time (in Einstein’s term, a Unified Field) and every form of mass, including the Higgs boson and dark matter. They also interact via the Riemann hypothesis, Wick Rotation, and – in the production of other dimensions which are home to dark energy and dark matter which interact with the known dimensions - the Mobius Matrix. This Unified Field will immeasurably boost knowledge. All the knowledge that ever existed or shall exist is “out there” and every person, being part of the unified field, will sooner-or-later learn how to access the deepest past and the remotest future. 
It will be proposed that time travel – both to any bygone century or to any century ahead – will physically confirm or refute claimed knowledge. Astrophysicist Jeff Hester – famous for the photographs he took using the Hubble Space Telescope – wrote in his “Astronomy” magazine column about 5 years ago that although knowledge is constantly changing, the methods of acquiring knowledge remain the same. When the unified field joins forces with physical time travel into the past as well as the distant future, learning new things will be done in radically different ways eg we’ll no longer be completely dependent on learning through bodily senses like sight and hearing, or through university training.

Question – how does the Riemann hypothesis support faster-than-light travel in space and trips into the past / future? Answer – using the axiom that there indeed are infinitely many nontrivial zeros on the critical line (calculations have confirmed the hypothesis to be true to over 13 trillion places), the critical line is identified as the y-axis of Wick rotation (see the text accompanying Fig. 4). This suggests the y-axis is literally infinite and that infinity equals zero. In this case, it is zero distance in time and space (again, see the text accompanying Fig. 4). Travelling zero distance is done instantly and is therefore faster-than-light travel. Wick rotation is essential to this article’s description of a topological (mathematical) universe and the Riemann hypothesis’ identification with Wick means the hypothesis doesn’t just apply to the distribution of prime numbers but also applies to the fundamental structure of the mathematical universe’s space-time. It doesn’t apply to space alone but to time too (Einstein’s Relativity leads to faster-than-light travel making time travel possible). This article also addresses antigravitons and the static universe, as well as answering the criticism that the natural and the artificial shouldn't be mixed. The discussion takes the view that “natural” and “artificial / technological” are the same thing – and explains HOW they are the same thing.

(12) (PDF) If Our Mathematical Universe Is Topological, Complex Coordinates With Real Plus Imaginary Parts Will Enable Time Travel And Magnify Learning Exponentially. Available from: https://www.researchgate.net/publication/367561138_If_Our_Mathematical_Universe_Is_Topological_Complex_Coordinates_With_Real_Plus_Imaginary_Parts_Will_Enable_Time_Travel_And_Magnify_Learning_Exponentially [accessed Mar 31 2023].

Vgger - 1 year ago

A world-wide pause on the development of AI is simply not possible. The US does not control China or any other nation.

A pause in this country would be dangerous to our interests. The sane effort regarding AI research should focus on guardrails keeping AI directed towards human benefits.