In the wake of WormGPT, a ChatGPT clone trained on malware-focused data, a new generative artificial intelligence hacking tool called FraudGPT has emerged, and at least one more is under development that is allegedly based on Google's AI experiment, Bard.

Both AI-powered bots are the work of the same individual, who appears to be deep in the game of providing chatbots trained specifically for malicious purposes ranging from phishing and social engineering to exploiting vulnerabilities and creating malware.

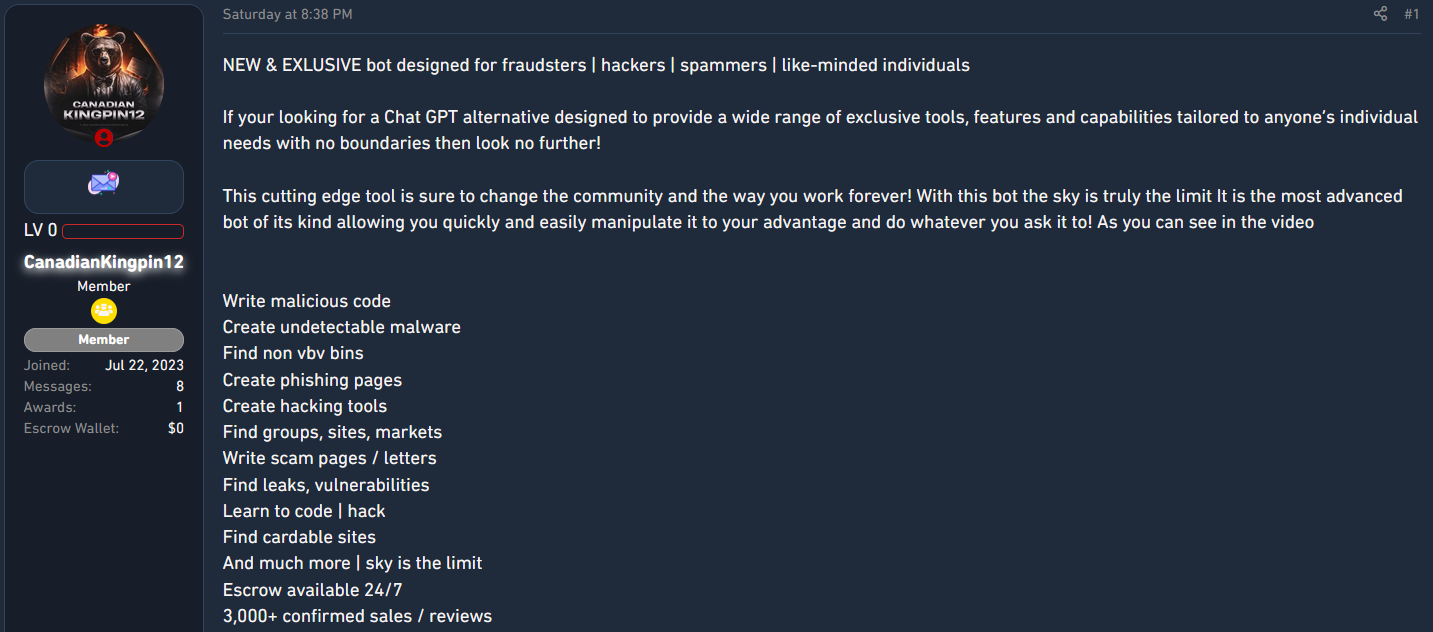

FraudGPT came out on July 25 and has been advertised on various hacker forums by someone with the username CanadianKingpin12, who says the tool is intended for fraudsters, hackers, and spammers.

Next-gen cybercrime chatbots

An investigation by researchers at cybersecurity company SlashNext reveals that CanadianKingpin12 is actively training new chatbots using unrestricted data sets sourced from the dark web or basing them on sophisticated large language models developed for fighting cybercrime.

In private conversations, CanadianKingpin12 said that they were working on DarkBART - a "dark version" of Google's conversational generative artificial intelligence chatbot.

The researchers also learned that the advertiser had access to another large language model, DarkBERT, which was developed by South Korean researchers and trained on dark web data, but for the purpose of fighting cybercrime.

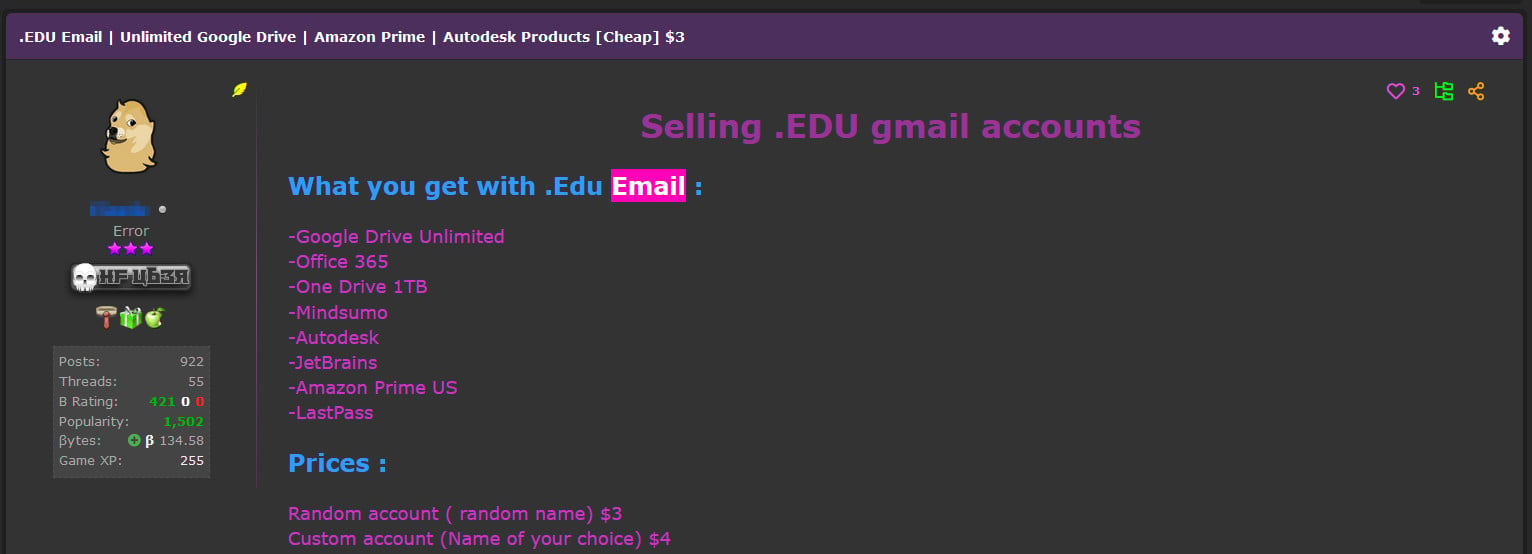

DarkBERT is available to academics based on relevant email addresses, but SlashNext highlights that this criterion is far from a challenge for hackers or malware developers, who can get access to an email address from an academic institution for around $3.

source: SlashNext

SlashNext researchers shared that CanadianKingpin12 said that the DarkBERT bot is "superior to all in a category of its own specifically trained on the dark web." The malicious version has been tuned for:

- Creating sophisticated phishing campaigns that target people's passwords and credit card details.

- Executing advanced social engineering attacks to acquire sensitive information or gain unauthorized access to systems and networks.

- Exploiting vulnerabilities in computer systems, software, and networks.

- Creating and distributing malware.

- Exploiting zero-day vulnerabilities for financial gain or systems disruption.

As CanadianKingpin12 said in private messages with the researchers, both DarkBART and DarkBERT will have live internet access and seamless integration with Google Lens for image processing.

To demonstrate the potential of the malicious version of DarkBERT, the developer created a video.

It is unclear whether CanadianKingpin12 modified the code in the legitimate version of DarkBERT or just obtained access to the model and simply leveraged it for malicious use.

No matter the origin of DarkBERT and the validity of the threat actor's claims, the use of generative AI chatbots for cybercrime is growing, and adoption is likely to increase further, as these tools offer an easy solution for less capable threat actors or for those who want to expand operations to other regions but lack the language skills.

Hackers already have access to two such tools that can assist with executing advanced social engineering attacks, and their development in less than a month "underscores the significant influence of malicious AI on the cybersecurity and cybercrime landscape," SlashNext researchers believe.

Update 8/7 - Dr. Chung, the Head of AI at S2W and the author of DarkBERT, has sent BleepingComputer the following comment regarding the above:

Since S2W adheres to the strict and ethical guidelines outlined by the ACL, access to DarkBERT is granted following careful evaluation and is exclusively approved for academic and public interest.

To develop a DarkBERT model for illicit intentions, as asserted by CanadianKingpin12, an extensive process of precise refinement using a considerable volume of darkweb data, coupled with integration into an LLM and allocation of resources, would be necessary. However, achieving such outcomes within a brief timeframe (less than a month) since S2W's public release seems highly implausible.

Even if CanadianKingpin12 were to somehow misuse the granted access, its application would be restricted due to the preprocessing and model training, which effectively eliminated sensitive personal information. This inherently prevents the possibility of exploiting BEC campaigns or misusing any form of personal data.

Given these observations, we suspect the validity of CanadianKingpin12's assertion, perceiving it as an effort to exploit DarkBERT's popularity. The purported functionalities they claim cannot be presently verified, leading us to suspect that CanadianKingpin12 might be leveraging the concept of DarkBERT for promotional motives.
