Image: Kelly Sikkema

Columbia University researchers have developed a novel algorithm that can block rogue audio eavesdropping via microphones in smartphones, voice assistants, and connected devices in general.

The algorithm can work predictively. It infers what the user will say next and generates obstructive audible background noise (whispers) in real-time to cover the sound.

For now, the system works only with English and has a success rate of roughly 80%. The volume of the noise is relatively low, minimizing user disturbance and allowing comfortable conversations.

As real-world tests showed, the system can make speech impossible for automatic speech recognition technology to discern, regardless of the software used or the microphone's position.

The university's announcement also promises that future development will focus on supporting more languages, where linguistics allows similar performance, and on making the whispering sound completely imperceptible.

A complex problem

Microphones are embedded in nearly all electronic devices today, and users get a sense of how much automated eavesdropping takes place when they see ads for products mentioned in private conversations.

Many researchers have previously attempted to mitigate this risk by using white noise that could fool automatic speech recognition systems up to a point.
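To make the white-noise baseline concrete, here is a minimal sketch of mixing Gaussian white noise into a signal at a chosen signal-to-noise ratio. It is not taken from the paper or from any of the earlier work mentioned above; the function name `add_white_noise`, the use of plain Python lists for audio samples, and the SNR values are all assumptions made for illustration.

```python
import math
import random


def add_white_noise(signal, snr_db, seed=None):
    """Return a copy of `signal` with Gaussian white noise at `snr_db` dB SNR.

    Hypothetical helper for illustration; `signal` is a list of float samples.
    """
    if not signal:
        raise ValueError("signal must contain at least one sample")
    rng = random.Random(seed)
    # Average power of the clean signal.
    signal_power = sum(x * x for x in signal) / len(signal)
    # SNR(dB) = 10 * log10(signal_power / noise_power)
    noise_power = signal_power / (10 ** (snr_db / 10))
    sigma = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, sigma) for x in signal]


# Example: a 440 Hz tone sampled at 16 kHz (assumed values), masked at 10 dB SNR.
tone = [math.sin(2 * math.pi * 440 * n / 16_000) for n in range(16_000)]
noisy = add_white_noise(tone, snr_db=10, seed=0)
```

Noise of this kind does not depend on what is being said, which is one reason it only works "up to a point" against recognizers.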

However, none of the existing real-time voice concealment methods is usable in practical situations, the researchers say, because the audio requires near-instantaneous computation, which is not feasible with today's hardware.

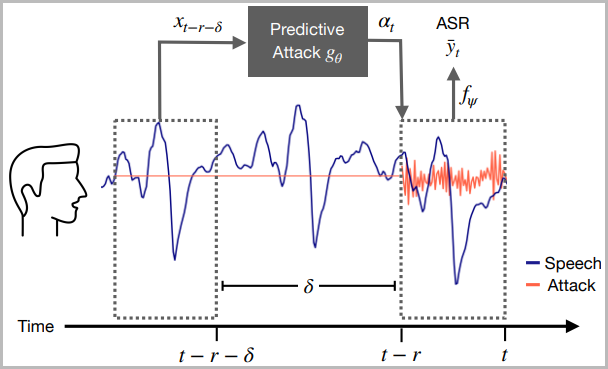

The only way to address this problem is to develop a predictive model that would keep up with human speech, identify its characteristics, and generate disruptive whispers based on what words are expected next.

Neural voice camouflage

Building upon deep neural network forecasting models applied to packet loss concealment, Columbia's researchers developed a new algorithm based on what they call a "predictive attacks" model.

The idea is to take into account every spoken word the speech recognition models are trained to transcribe, predict when the user will say those words, and generate a whisper at the right moment.

They trained their model for two days using eight NVIDIA RTX 2080Ti GPUs on a 100-hour speech dataset that was adjusted for this purpose with backward and forward passes.

As the researchers explain in the technical paper, they found that the optimal prediction time is 0.5 seconds into the future.

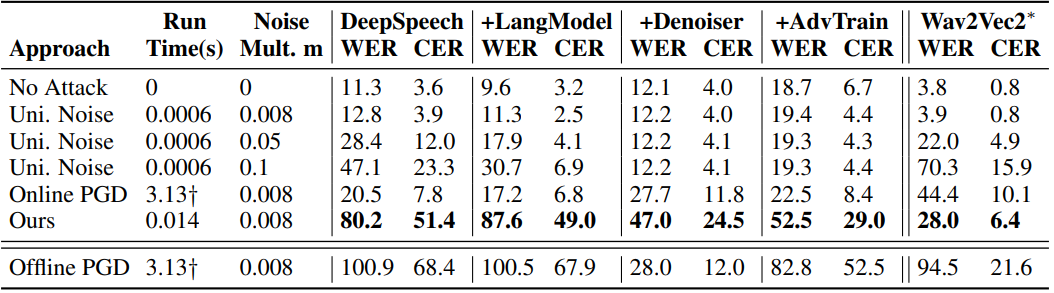
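The timing constraint behind the 0.5-second prediction horizon can be sketched as a simple scheduling loop. This is only an illustration of the idea, not the researchers' implementation: the 16 kHz sample rate, the 0.1-second chunk size, the 2-second context window, and the `placeholder_predictor` function (which just returns silence) are all assumptions.

```python
from collections import deque

SAMPLE_RATE = 16_000   # assumed; not stated in the article
LOOKAHEAD_S = 0.5      # prediction horizon reported by the researchers
CONTEXT_S = 2.0        # assumed amount of past audio given to the model


def placeholder_predictor(history, n_out):
    """Hypothetical stand-in for the trained model: returns silence."""
    return [0.0] * n_out


def schedule_noise(chunks, predictor=placeholder_predictor):
    """Return (play_at_sample, noise) pairs for a stream of audio chunks.

    Noise computed from audio heard up to sample t is scheduled to start
    playing at t + lookahead, so the model has LOOKAHEAD_S seconds to run.
    """
    lookahead = int(LOOKAHEAD_S * SAMPLE_RATE)
    history = deque(maxlen=int(CONTEXT_S * SAMPLE_RATE))
    heard = 0
    schedule = []
    for chunk in chunks:
        history.extend(chunk)
        heard += len(chunk)
        noise = predictor(list(history), len(chunk))
        schedule.append((heard + lookahead, noise))
    return schedule


# Three 0.1-second chunks of (silent) microphone input.
mic_chunks = [[0.0] * 1600 for _ in range(3)]
for play_at, noise in schedule_noise(mic_chunks):
    print(f"play {len(noise)} samples at t = {play_at / SAMPLE_RATE:.2f} s")
```

Because each noise chunk is aimed at audio that has not been spoken yet, the computation no longer has to be instantaneous; it only has to finish within the lookahead window.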

Their experiments tested the algorithm against various speech recognition systems, finding an overall induced word error rate of 80% when the whispers were deployed.

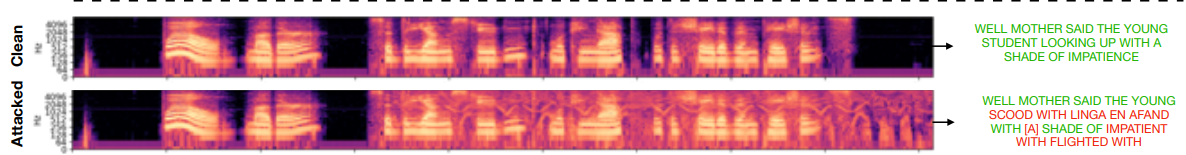
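For readers unfamiliar with the metric, word error rate is conventionally computed as the word-level edit distance between the reference transcript and the recognizer's output, divided by the number of reference words. The sketch below shows that standard calculation; the function name and the example sentences are invented for illustration and do not come from the paper.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the reference words processed
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# Invented example: one substitution and one deletion over five words.
print(word_error_rate("please call me back tomorrow", "please tall me back"))
```

A higher value means the recognizer got more words wrong, so for a camouflage system a high induced error rate is the desired outcome.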

Additionally, the scientists presented some realistic in-room tests, along with the resulting text identified by speech recognition systems in each case.


Notably, the experiments showed that shorter words like "the", "our", and "they" are harder to mask, while longer words are generally easier for the algorithm to attack.

Implications

This study, and the development of a system for disrupting speech eavesdropping, are proof of a systemic regulatory failure to curb unrestrained data collection for targeted marketing.

Even if these anti-spying systems are widely employed in the future, AI developers will almost certainly attempt to adjust their recognition methods to overcome disruptive whispers or reverse their effect.

The more complex the situation becomes, the more overwhelming it will be for people to protect their privacy.

For example, deploying a silent anti-eavesdropping tool at home or in an office introduces a new point of potential risk, as even if these tools are trustworthy, targeting them to access the predictive data in real-time would essentially be indirect eavesdropping.
