Ask These 5 AI Cybersecurity Questions for a More Secure Approach to Adversarial Machine Learning

NetSPI Executives

FEBRUARY 21, 2024

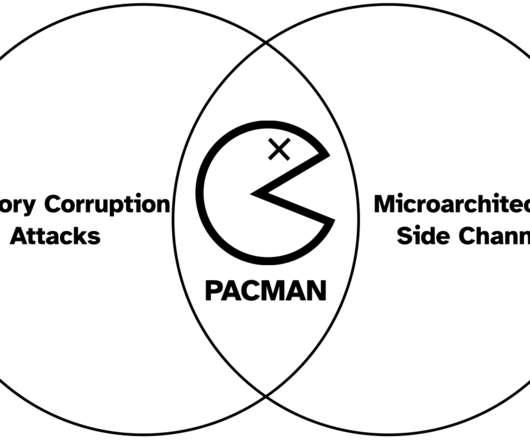

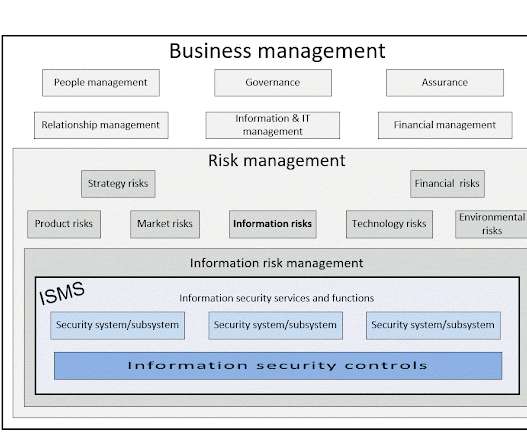

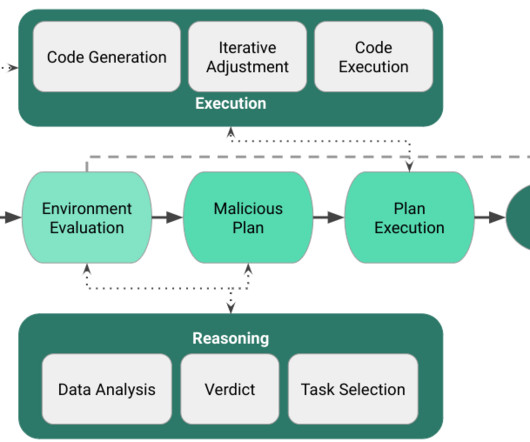

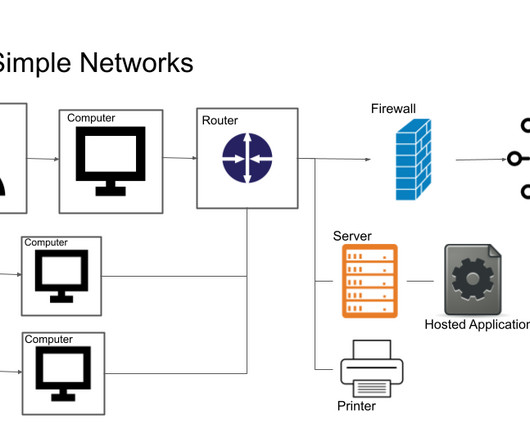

Artificial Intelligence (AI) and Machine Learning (ML) can improve many business processes, but they also give malicious actors new weaknesses to exploit. One question to ask early: how transparent is the model architecture? Will the architecture details be publicly available or proprietary?
