Applying Security Engineering to Prompt Injection Security

Schneier on Security

APRIL 29, 2025

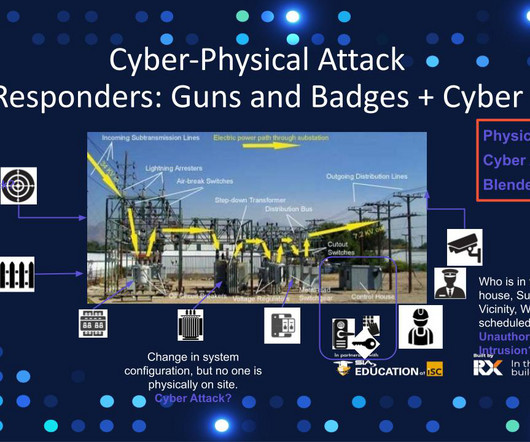

This seems like an important advance in LLM security against prompt injection:

> Google DeepMind has unveiled CaMeL (CApabilities for MachinE Learning), a new approach to stopping prompt-injection attacks that abandons the failed strategy of having AI models police themselves. Instead, CaMeL treats language models as fundamentally untrusted components within a secure software framework, creating clear boundaries between user commands and potentially malicious content.
>
> […]
>
> To understand CaMeL
